Sound and video are, on the face of it, the most unremarkable ingredients in the new media arts.

Compared to smart textiles, bio-art, data visualisation or locative media, sound and moving image seem almost archaic. Yet as the work documented in this edition of Filter shows, these materials are anything but obsolete. This body of work reflects a dynamic practice, and one that has emerged in recent years as a real Australian strength. It’s not video art, though it has been known to fly that label as a flag of convenience; if anything its ties are closer to sound and music. Its distinguishing feature is its focus on, to recycle another archaic word, the audiovisual. The relation between sound and image is central here. The soundtrack, largely neglected in video art, is amplified; the moving image, taken for granted as “content” in an era of desktop video, is obliterated into sheer texture, or synthesised from scratch. Most importantly, sound and image fuse into new, tightly articulated wholes; they are cross-wired, cut or molded into blocks of something like raw sensation.

As the following articles show, this practice crosses, and links, diverse scenes and cultures; it draws on video and sound art, experimental music and improvisation, computer music and experimental film, live video and the VJ scene. It also reflects in part a rich historical tradition of abstract animation and “visual music”, as well as a specifically Australian history of audiovisual practice; Stephen Jones’ video work with Severed Heads looms large. But at the core of this collection is a focus on the point of audiovisual fusion – the moment of synchresis – and as I hope to explain below, that point of fusion, and the relation it describes, has a particular significance in digital media culture.

On the screen, pixels shift, a dark void moves, opening and closing. The speakers vibrate; pulses and lumps of tone and pitch, flecks of air and noise; a voice. The void belongs to a face; and the lumps, stops and pulses from the speaker meet in time with the openings and closings of the mouth. We sense a correlation, a tight coincidence between these disparate events, and we recognise, involuntarily, a common cause that seems to link them. A newsreader enunciates the headlines: her image and her voice, separated at the point of capture, technically distinct and independent in the video signal, re-embodied by different means, are finally reunited in our perception.

This is synchresis, in its everyday form: lip-sync, the perceptual trick at the representational core of screen culture. Film sound theorist Michel Chion, who coined the term, defined synchresis as “the spontaneous and irresistible weld produced between a particular auditory phenomenon and visual phenomenon when they occur at the same time.” [1] Lip-sync is its most ubiquitous form, but as Chion shows there are many others. The cinematic punch – what Chion calls the “emblematic synch point” – shows how synchresis can be fabricated, how a sound effect guarantees an event that “we haven’t had time to see” – but also an event that, in fact, never occurred.

The audiovisual cliché of the punch also hints at the wider potential of this audiovisual fusion. As Chion observes, synchresis works even with “images and sounds that strictly speaking have nothing to do with each other, forming monstrous yet inevitable and irresistible agglomerations in our perception.” With the perceptual impetus of synchronisation, anything will glue to anything, and in the process new and sometimes monstrous wholes are constructed. Scratch video, Milli Vanilli, and more recently the YouTube-powered renaissance in video ventriloquism have all demonstrated this at one level. Yet these cutups rely on synchresis reinforcing the cinematic representation of a body, a source, a redundant, all-too-obvious shared cause. The audiovisual practice presented here offers some powerful alternatives.

Ian Andrews’ Spectrum Slice is constructed on a temporal grid – a field of tripwires that trigger Chion’s monstrous agglomerations. The video track is chaotically intercut, but the edits are locked to the grid’s pulse. The same pulse structures the audio track, as Andrews punches live radio samples into a loop. Tiny, random chunks of degraded video and radio noise weld into new units. But in this recombinant cutup, staggered loops and refrains also move against each other: synchretic welds are formed and reinforced only to be broken and shifted. This is synchresis at work; and though it’s constantly apparent that sound and image are disparate, the jolt of fusion keeps working. In Spectrum Slice, Chion’s monsters multiply, are cut into pieces, disintegrate and re-form; a preacher with a fragment of deep talk-radio voice; then a crowd with white noise static, then video static and a snatch of orchestral strings. Associative chains and correlations form between the fragments; between the cosmic, molecular jitter of audio and visual noise, and faces in a crowd; crowd/audience and preacher/presenter.

Similar chains and networks emerge in Abject Leader’s Bloodless Landscape, again created by combinations of synchretic fusion and disjunction; and as in Spectrum Slice, the material signatures of the medium are everywhere. These works take apart the conventional anchor of synchresis – the represented body – but instead create an embodiment of their own media substrates. Sound and image operate in indexical relays that ground media forms in a material continuum: dirt, bubbles, scratches, static, celluloid, light; synchresis becomes haptic, or tactile.

Peter Newman’s work intensifies this material quality while dissolving the last fragments of indexical content into incandescent plasma. The cinematic constituent, the edited shot, is likewise obliterated into long textural dissolves; in a sort of inverse of Spectrum Slice, which trips up synchresis by multiplying the edit, Newman’s audiovisual textures tighten their relationship through immersive duration. A flickering, rhythmic synch point binds Rosebud together from the outset; but the synchretic monster here is a single entity; it intensifies as it gradually reveals (or destroys) itself, a tactile or palpable presence.

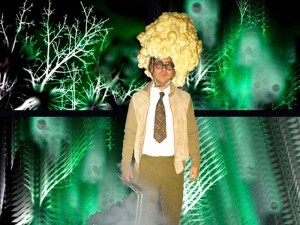

The_Geek_From_Swampy_Creek. Credits: Wade Marynowsky. Copyright Wade Marynowsky. Released by Demux, 2007.

The monstrous body makes a representational comeback in Wade Marynowsky’s work. Like Newman, Marynowsky uses digital processes to manipulate sound and image into abstraction; but where Newman’s video seems to have been worn smooth over years, Marynowsky’s retains a hard, self-referential edge. In a post-glitch performance parody, The_Geek_From_Swampy_Creek – another monster – sways gently at his Powerbook, calling up disintegrating images of his wetland home. Unlike most of his peers, Marynowsky puts his own body up as the synchretic anchor, the common cause for sound and image. Jean Poole’s floating monkey in Cappadocia Skies is another audiovisual self-construction; author as both witness and performer, using the sure-fire synchresis of music video to re-frame experience and, again, landscape. Cappadocia Skies is also an emblem for a nomadic laptop-video culture, where images and sound are gathered, networked, recombined, processed, filtered, and beamed back into the environment. A kind of distributed, recursive synchresis machine.

In most cases, synchresis is constructed; sound and image composed and arranged to form these spontaneous welds. However, in one important strain of Australian AV, synchresis becomes automatic, inherent; and the synch point, the synchretic moment, is extended and intensified to become all-encompassing. Robin Fox’s audiovisual practice began as he connected his laptop audio rig to an old analog oscilloscope. The scope renders audio signal as dynamic image, a phosphorescent trace generated automatically, according to a technically defined translation. The result is an audiovisual instrument; Fox’s audio software becomes a sound-and-image synthesiser. In the resulting work the synchretic weld acquires new power; image and sound are tightly and immediately coupled. The sense that Newman conveys in Rosebud, of an autonomous, coherent AV entity, is extended. There’s a sense of something like revelation, as sound-forms unfold: Fox investigates an audiovisual space which is totally self-consistent, yet continually surprising.

Andrew Gadow’s work approaches the same relation from the other side; his practice is based on rendering synthesised video signals as audio. Using a Fairlight CVI – a pioneering (but now archaic) Australian instrument – Gadow generates visual patterns and forms with an automatic double in modulated buzz-saw sound. Again there is a direct and immediate fusion, an intense coherence behind the abrasive surface of the work. In Gadow’s more recent work he has introduced an analog audio synthesiser, creating loops in which video is generated by the CVI, filtered by the audio synth, and returned to video. Botborg complexify these loops, wiring a video mixer into an unstable, psychotropic feedback system where the elements become indistinguishable; we see and hear video and sound in varying mixtures and causal relations.

Synaesthesia offers a promising analogy for these works, as well as an alternative model of audiovisual relations. In synaesthetic perception one modality is automatically and involuntarily translated into another. Synaesthetes may perceive letters, numbers or days of the week as inherently coloured, associate shapes with smells or tastes, or see coloured forms in response to music. The analog crossovers of Fox and Gadow seem to echo the neural cross-wiring thought to cause synaesthesia; in both cases there’s an immediate and automatic connection between sound and image. Is this some kind of machine synaesthesia? If so, can we learn it, internalise it and reconfigure our own sensorium? In the fictotheory of Arkady Botborger, crosslinked sound and image promise to open “new neural pathways” that expand perception by overwhelming or bypassing our “linguistic centres”. [2]

Artificial or induced synaesthesia is possible, but not in a few minutes of AV: it would mean living with, and adapting to, a rewired or augmented sensorium in the long term. An alternative model for these fused audiovisuals is both more realistic and more abstract. Synchresis is perceptually founded on the process of cross-modal binding, where percepts in different modes are “bound” into recognition as a single entity. Binding feels good – some neuroscientists theorise that it activates our limbic pleasure circuits. It comes with a flash of recognition, an “a-ha” moment, a sense of noesis, or revelation. Perhaps the affect of tightly fused audiovisual experiences is related to the perceptual process of binding?

What some suggest as an adaptive rationale for cross-modal binding – and the reason it feels good – is its functional role in interpreting our environment. As the newsreader shows us every night, we link sound and image into a functional, coherent model of the environment; we recognise the common cause of these correlated perceptions. Which raises the question: what is the common cause in the tightly correlated audiovisuals of artists such as Fox and Gadow? Unlike constructed forms of synchresis, in these works a single structure really does cause both sound and image: the signal, the abstract pattern of voltage fluctuations, is what we both see and hear. The artists literally feel out the domain of the signal – but also a more abstract form. Moment by moment we sense the signal’s dual manifestations in sound and image; over time, we come to sense the pattern of correspondences between sound and image. This is the map, the relation between the signal domains, which for Fox and Gadow at least is a static, technical artefact.

This map is a space of transformation; it describes how one domain connects with another. Though conceptually abstract, it is completely ubiquitous in contemporary media. Digital media are founded on transformation, for the digital is imperceptible in itself; “digital imaging,” “digital video” and “digital sound” are in a sense oxymorons, for what we sense is not the digital, but a mapping of the digital into one or more domains of sensation. These maps are essentially arbitrary, open to change; we can take image data and re-map it into sound, or text; map text into image, or three-dimensional form. If the digital has no inherent manifestation, no necessary relation to our perception, then the question of the aesthetics, or affect, of digital media, is a question of the map. In this context Gadow and Fox do something remarkable, and entirely contemporary, in their cross-wirings of image and sound; they give us a sense, literally, of the map.
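The idea of an arbitrary map can be put concretely in a minimal sketch. The Python below is an illustration only, not a description of any artist’s method: the function names `as_samples` and `as_pixels`, the eight sample bytes and the scaling choices are all assumptions made for the example. It takes one sequence of bytes and maps it into two domains of sensation, audio samples and greyscale pixels.

```python
def as_samples(data):
    """Map each byte (0..255) to an audio sample in the range -1.0..1.0.

    The linear scaling is an arbitrary choice made for this sketch.
    """
    return [(b - 127.5) / 127.5 for b in data]


def as_pixels(data, width):
    """Map the same bytes to rows of greyscale pixels (0 = black, 255 = white).

    The row width is equally arbitrary; a different width gives a different image.
    """
    return [list(data[i:i + width]) for i in range(0, len(data), width)]


# Hypothetical data: eight bytes with no inherent meaning as sound or image.
data = bytes([0, 64, 128, 255, 255, 128, 64, 0])

samples = as_samples(data)        # one manifestation: a short waveform
pixels = as_pixels(data, width=4) # another: a 4 x 2 greyscale image
```

Neither output is the “real” form of the data; changing the scaling or the row width yields a different sound or a different image from the same bytes.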

Maps may be ready-made, technical artefacts, as they are for Fox and Gadow, or like Chion’s synchretic monsters, they can be invented, arbitrary, anything-at-all. Gordon Monro’s Triangular Vibrations unfolds from a computational model that generates both sound and image, and so invents a distinctive correspondence between sound and image. Here too, cross-modal perceptual cues direct us to the “common cause” – that computational model. That model – another abstract but ubiquitous figure in digital media – becomes literally sensible; as the system slowly changes it reveals its own logics, its internal patterns of consistency, its boundaries and centres.

Oliver and Pickles’ Fijuu2 navigates a similar space – a computational map between sound and image – but here its exploration is turned over to the user. With a palette of audiovisual forms and filters, gestural controls and a sequencer, Fijuu2 demonstrates a proliferation of digital maps, some of the range of possible audiovisual “anythings” this space contains. More monsters, and again they go for the throat, so to speak; the gestural distortions in Fijuu2 make a visceral choreography out of the abstract malleability of the digital. Drawing on the technical resources of open source game culture, this work reconfigures the default narratives of hardware-accelerated 3D into far more promising circuits of action and sensation.

Synchresis is, as Chion says, ubiquitous, commonplace. It’s one of the mainstays of Western media culture, and we take its familiar manifestations entirely for granted. A mouth, a sound, a body, a figure, a punch, a story. Our sensoria are well-wired for finding these correlations, identifying those coherences in the incoming flux of reality that tell us, something’s out there. But we’re starved, at the same time, of those perceptual moments that really matter, marked by an unfamiliar coherence, an unknown cause: what’s that? McLuhan told us years ago that art is perceptual training for the future; in this case, it’s directing us towards powerful abstractions that define the digital present. [3] Synchresis is ubiquitous and commonplace, yet when we focus, as this work demands, on that kernel of media and perception, it opens out in ways that are anything but ordinary.

Mitchell Whitelaw

Mitchell Whitelaw is an academic, writer and artist with interests in new media art and culture, especially complex generative systems and digital sound and music. His work has appeared in journals including Leonardo, Digital Creativity and Contemporary Music Review. His work on a-life art was published in the book Metacreation: Art and Artificial Life (MIT Press, 2004). His recent work spans generative art and sonic and visual data-aesthetics. He is currently a Senior Lecturer in the School of Creative Communication at the University of Canberra.

References

[1] Michel Chion, Audio-Vision: Sound on Screen (New York: Columbia University Press, 1994), 60-63.

[2] Arkady Botborger, “Principles of Photosonicneurokinaesthography,” in Botborg, Principles of Photosonicneurokinaesthography DVD (Brisbane: Half/Theory, 2005).

[3] Marshall McLuhan, Understanding Media (London: McGraw-Hill, 1964), 64-66.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 2.5 Australia.
